There are rules of thumb for data processing that apply across a wide range of data sources and analysis techniques. These let us ignore some costs and apply abstractions that simplify workflow and enable clean programming interfaces and orthogonal composition of software components. The thing about video is that it breaks the rules.

Here are some of the rules that video breaks:

- Storage is cheap

- Transmission is negligible

- Analysis can be done in the cloud

- Processing steps are independent

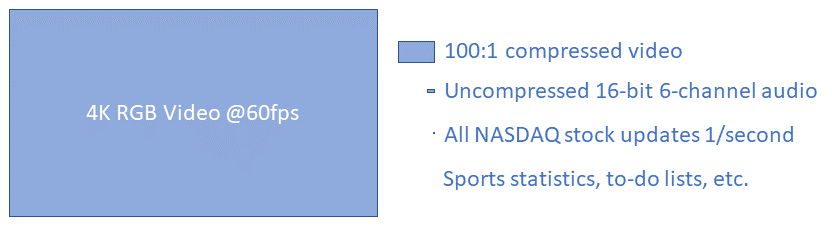

Size: Video’s main problem is its immense size compared to other typical data streams. Raw RGB 4K video with 8 bits per color at 60 frames/second produces 1.5GB/second. Its closest competitor, uncompressed 16-bit, 6-channel audio at 44kHz produces 500kB/second (2800 times less). Second-by-second pricing for all stocks on the NASDAQ exchange stored in 32-bit floating point produces 22kB/second (65000 times less). Sports statistics and to-do lists pale by comparison.

Compression: The typical solution to size is progressive lossy compression, which causes most of the remaining problems. Here’s why this is used:

- Because of its Shannon information content, the maximum theoretical size of a losslessly-compressed 8-bit-per-color video that has four counts of noise per pixel would be 1/4th of its original size (4:1 compression). To do better requires lossy compression.

- Frame-independent lossy compression typically starts to show perceptible quality loss at around 10:1.

- Providing additional compression requires making use of inter-frame coherence; parts of the video don’t change, and large objects move as blocks. By starting with a compressed full-frame image and adding updates only for changes in later frames, progressive compression can achieve higher compression without additional loss of perceptual quality (typically on the order of 100:1 before objectionable artifacts appear).

Here’s how all of this breaks the rules.

Storage is (not) cheap

Even when compressed, a single 4K60 video stream produces around 53GB/hour. Storing video for a two-hour event captured by five cameras takes 530GB. At ten events per month, the annual storage requirement for a single venue would be around 65TB.

On-demand cloud storage costs as of December 2022 range from $6/TB/month through $23/TB/month. These costs accumulate year over year, so in the third year event storage would cost between $12K and $45K. The fourth year would cost between $16K and $63K. Even deep archival storage costs of $1/TB/month would be $2.7K in the fourth year.

(Even if the server, power, and personnel costs are ignored and the client is willing to risk total data loss, a single external drive costs on the order of $18/TB. Cloud-based storage is hard to beat.)

Transmission is (not) negligible

A single 4K60 raw RGB video stream exceeds the GigE capacity of a typical LAN by more than a factor of ten, exceeding even the 10GigE available to typical cloud hosts. For video mixing applications in installations with 20+ cameras, many streams must be brought together on a server. This means that initial compression must happen along with video ingest from the camera.

Even a single compressed video feed is on the order of 100Mbps, which exceeds the 35Mbps upload speeds on cable Internet providers’ gigabit-download plans. This prevents live streaming of 4K60 video from a venue except over fiber-optic connections and requires offline upload to the cloud. A small number of 1080p60 streams can be uploaded over a cable connection if there is reserved bandwidth.

Some cloud service providers charge on the order of $90/TB for data egress from their cloud. The annual transmission cost for the single venue described above to send video of all cameras from all events to a single client would cost nearly $5900. Fan-out of streams via multicast for live events would cost extra. YouTube and other services could be utilized for this; their revenue model is to provide advertisements over your content. These charges are not incurred for video data used internally for analysis, and there is at least one cloud services vendor that provides free data egress; they should be selected for video data that is to be widely viewed.

Analysis can (not always) be done on the cloud

Although an analysis-preserving approach that can achieve 70x+ compression is available for license, typical progressive compression approaches prioritize perceptual quality. These introduce both spatial and temporal artifacts.

- Blockiness: The use of 8×8 or 16×16 macroblocks as the fundamental unit of image space, together with the loss of information, often results in reconstructions that have very clear edges in a regular pattern than has to do with the compression tiling.

- Edge distortion: The reduction of precision on the Fourier representation of the image causes ringing artifacts near brightness edges in the original video.

- Block motion: Higher compression is achieved by re-using portions of previous images that are shifted, scaled, and rotated and then sampled back into the new image. Analysis run on the resulting video will see objects moving according to the compression shift granularity rather than motion in the original video.

- Detail strobing: To enable the viewer to jump between locations and to provide tolerance to loss, full-image frames are periodically inserted into the video. With lossy compression, these images are missing detail that is filled in by the following progressive frames. This produces a periodic strobing in image details that has to do with the I-frame spacing not with motion in the original video.

These artifacts can interfere with image and video analysis, producing mis-estimation of object appearance and motion and even of fundamental image properties such as entropy over time.

To avoid these artifacts, image analysis must be performed as part of image acquisition and before compression. Otherwise, the compression and/or analysis must take these and other artifacts into account.

Processing steps are (not) independent

The need to compress video before transmission and to decompress before analyzing, editing, or viewing interacts with system architecture to produce couplings between processing steps.

The need to store most video on local or remote disk requires predictive prefetch of compressed data into system memory so that it is ready for decompression. This prefetch must trade off latency for bandwidth of the available communications channels.

Progressive encoding means that to access a particular video frame may require first decoding a number of previous frames (typically up to 29). This can require predictive decompression to make the correct frame available when needed for display.

The decompression operation itself requires a number of previous frames to be stored in memory (typically 2-5). For systems that are mixing a number of streams, this can start to strain the memory capacity of a server or video accelerator (GPU or video processing card) and require careful control of a shared cache between decompression thread(s) and rendering thread(s).

Video encoding is a compute-intensive operation that can be done with much higher speed and power efficiency using application-specific integrated circuits (ASICs) than using CPUs. A single ASIC can handle multiple full-rate streams, whereas it takes multiple CPUs to compress a single stream at full rate. This can require carefully scheduling data movement to and from ASIC memory, graphics memory, and system memory.

Reliable transmission to a client requires buffering, which causes latency. Variable bandwidth can require adaptively switching quality levels during transmission, which requires synchronizing switching with I-frame arrival on the new stream. This also requires coordination with the encoder and can involve encoding multiple streams at once during times of transition.

Conclusion

The large size and compression characteristics of video break many rules of thumb for system design with impacts on system cost and complexity. Careful attention must be paid to transmission, storage, and operation on video at all steps to provide high-quality, affordable solutions. Custom encoding and decoding hardware is often required to reach speed and cost targets. Pipelined processing with predictive prefetch from source to disk to memory to accelerator to rendering to network interface is required for highest performance with multiple streams.

All of this requires global system optimization to operate in the presence of installation-specific bottlenecks in bandwidth (cameras to storage servers, storage servers to mix/render servers, render servers to cloud, cloud to client device, etc.).

Epilog

As a case in point, a few months after this posting, Amazon Prime Video posted this description of of they moved from serverless to monolithic processing of their video pipeline and reduced cost by 90% while speeding up processing.